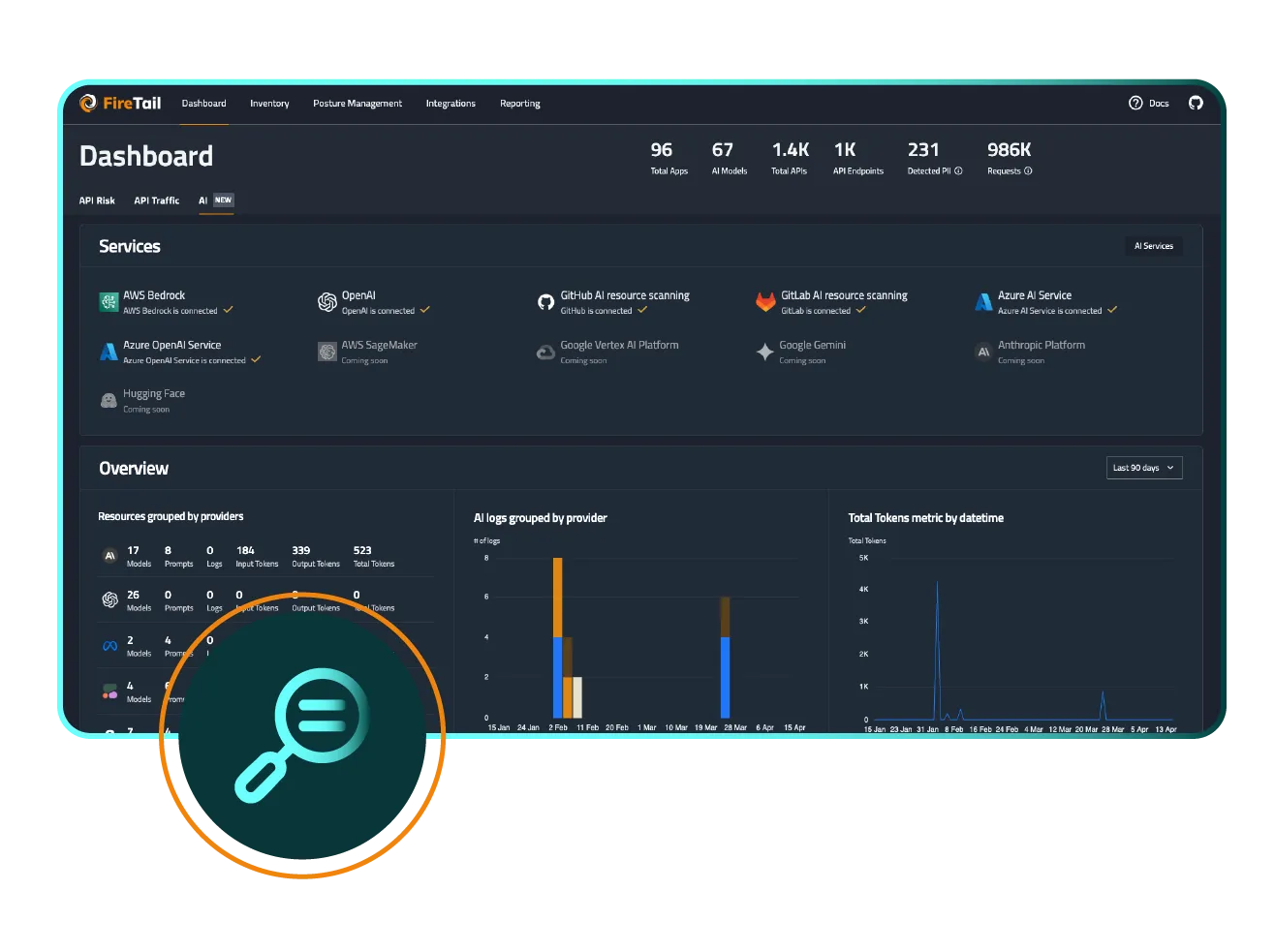

Use centralized LLM log data to power real-time threat detection and rapid incident response. Identify risks like PII leakage, jailbreaks, and policy violations across all AI usage. FireTail offers a unified AI detection and response solution.

With normalized logs from all major LLMs and AI providers, you can write a single detection rule for threats like PII exposure, and it will apply across your entire AI ecosystem.
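As a rough illustration of the "one rule, every provider" idea, the sketch below runs a single PII check over records that already share one shape. The `LLMLogRecord` fields, the provider names, and the regular expressions are all assumptions made for this example. They are not FireTail's actual schema or rule syntax, and the patterns are far too simple for production PII detection.

```python
import re
from dataclasses import dataclass


# Hypothetical normalized record; field names are illustrative only.
@dataclass
class LLMLogRecord:
    provider: str
    user: str
    prompt: str
    response: str


# Deliberately simple patterns for illustration; real PII detection needs more care.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def detect_pii(record: LLMLogRecord) -> list[str]:
    """Return the sorted names of PII patterns found in the prompt or response."""
    text = f"{record.prompt}\n{record.response}"
    return sorted(name for name, pattern in PII_PATTERNS.items() if pattern.search(text))


# The same rule runs unchanged on records from different (hypothetical) providers.
records = [
    LLMLogRecord("provider_a", "alice", "Summarize this for bob@example.com", "Done."),
    LLMLogRecord("provider_b", "carol", "What is the capital of France?", "Paris."),
]
findings = {r.user: detect_pii(r) for r in records}
```

Because the rule only reads the shared fields, adding another provider would not require touching `detect_pii` at all.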

FireTail monitors logs continuously and flags threats such as prompt injection, jailbreaking, misuse of AI tools, and abnormal activity, instantly alerting security teams.

Security teams can investigate LLM-related incidents using rich, structured logs with full context: who prompted, what was sent, and what the model responded with.

Based on detection rules and defined guardrails, FireTail can trigger automated responses like blocking future use, revoking access, or flagging users for follow-up action.
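One way to picture rule-driven responses is a policy table that maps a detection type to an action. The sketch below is a minimal illustration under stated assumptions: the three actions come from the paragraph above, but the detection names, the mapping itself, and the `choose_action` helper are hypothetical and do not reflect how FireTail is actually configured.

```python
from enum import Enum


class Action(Enum):
    FLAG_FOR_FOLLOW_UP = "flag_for_follow_up"
    BLOCK_FUTURE_USE = "block_future_use"
    REVOKE_ACCESS = "revoke_access"


# Hypothetical policy: which action each detection type triggers.
RESPONSE_POLICY = {
    "pii_exposure": Action.FLAG_FOR_FOLLOW_UP,
    "jailbreak": Action.BLOCK_FUTURE_USE,
    "prompt_injection": Action.REVOKE_ACCESS,
}


def choose_action(detection: str) -> Action:
    """Look up the configured action; unknown detections fall back to a human review flag."""
    return RESPONSE_POLICY.get(detection, Action.FLAG_FOR_FOLLOW_UP)
```

Defaulting unknown detection types to the least disruptive action keeps a misconfigured rule from locking users out by accident.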



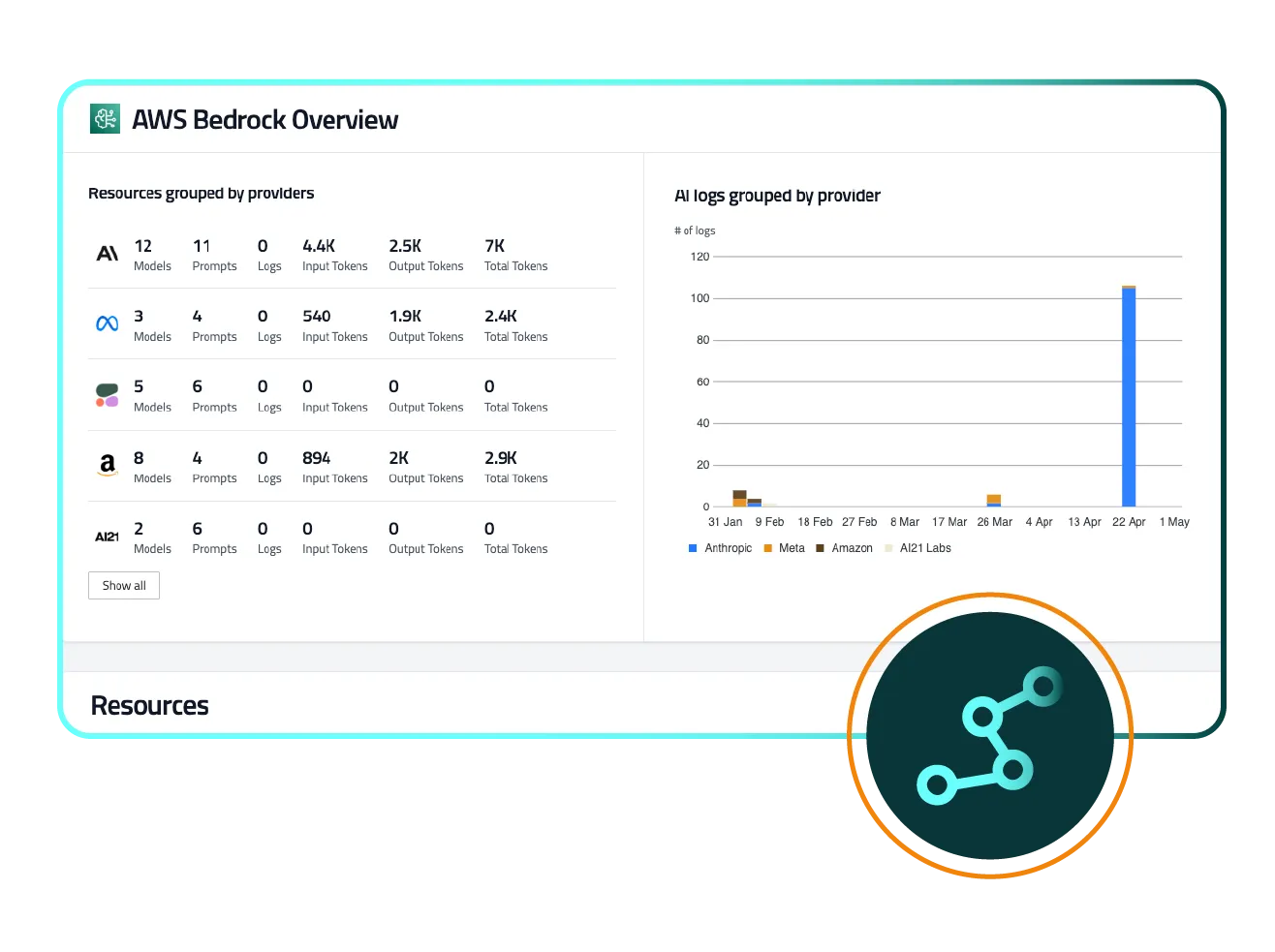

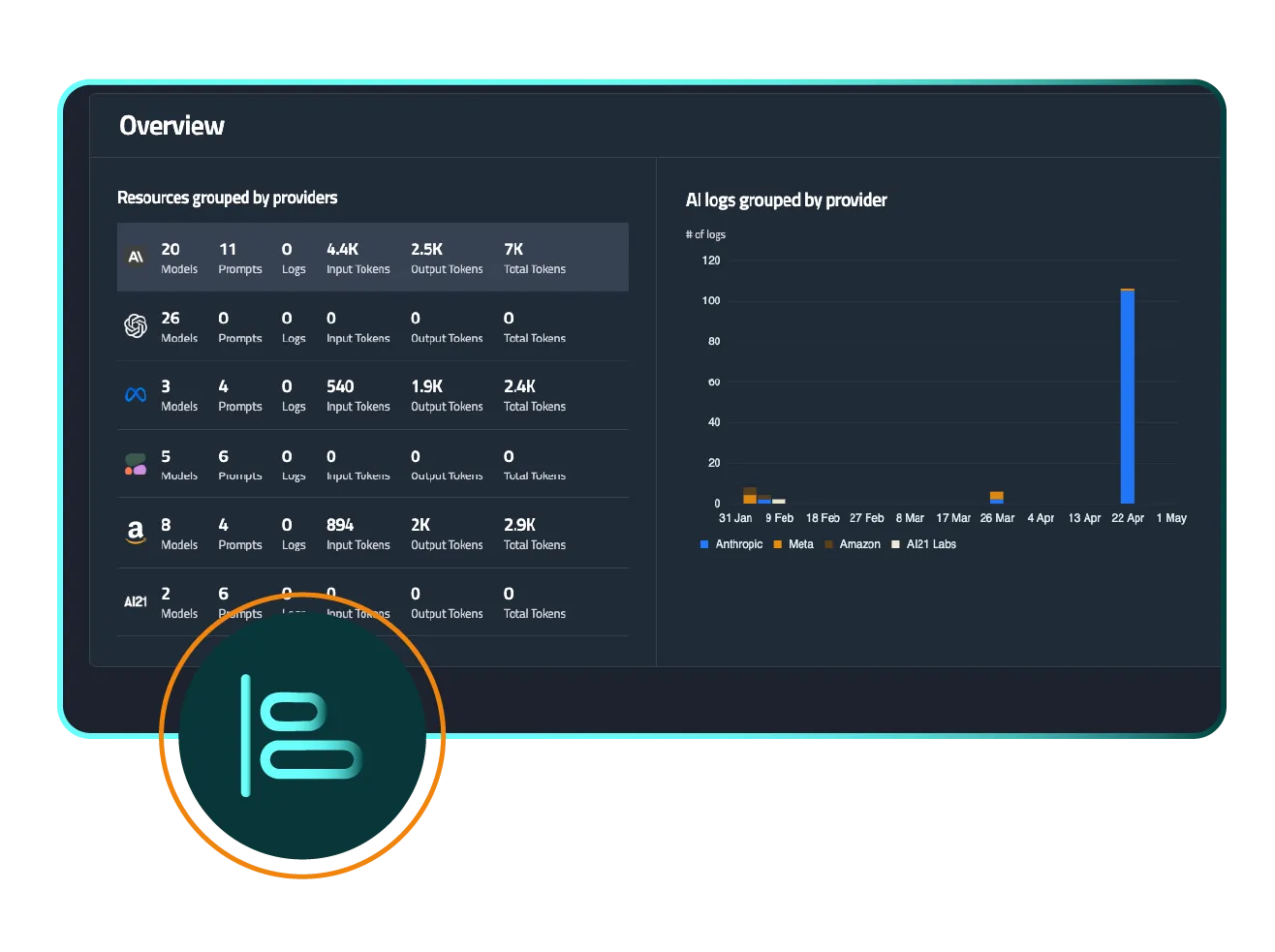

Organizations using multiple LLMs often lack unified detection and alerting. Each provider’s logs are in different formats, with different data elements, making it hard to detect threats like PII exposure or jailbreaks quickly and consistently.

FireTail normalizes logs from all AI providers into a single schema and log stream, enabling consistent threat detection logic. Whether the threat is prompt manipulation, a data leak, or rogue behavior, a single rule can detect it across every provider.
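To make the normalization step concrete, here is a minimal sketch of per-provider adapters that map differently shaped raw logs onto one common record. Everything in it is assumed for illustration: the two raw payload layouts, the provider names, and the target field names are invented, and neither real provider log formats nor FireTail's schema are represented.

```python
from typing import Any, Callable


def from_provider_a(raw: dict[str, Any]) -> dict[str, str]:
    # Hypothetical flat layout: user_id / input / output.
    return {
        "provider": "provider_a",
        "user": raw["user_id"],
        "prompt": raw["input"],
        "response": raw["output"],
    }


def from_provider_b(raw: dict[str, Any]) -> dict[str, str]:
    # Hypothetical nested layout: caller.name / request.text / reply.text.
    return {
        "provider": "provider_b",
        "user": raw["caller"]["name"],
        "prompt": raw["request"]["text"],
        "response": raw["reply"]["text"],
    }


NORMALIZERS: dict[str, Callable[[dict[str, Any]], dict[str, str]]] = {
    "provider_a": from_provider_a,
    "provider_b": from_provider_b,
}


def normalize(provider: str, raw: dict[str, Any]) -> dict[str, str]:
    """Convert a raw provider log into the shared record shape."""
    try:
        adapter = NORMALIZERS[provider]
    except KeyError:
        raise ValueError(f"no normalizer registered for provider {provider!r}") from None
    return adapter(raw)


a = normalize("provider_a", {"user_id": "alice", "input": "hi", "output": "hello"})
b = normalize(
    "provider_b",
    {"caller": {"name": "bob"}, "request": {"text": "hi"}, "reply": {"text": "hey"}},
)
```

Both results carry the same keys, so any downstream detection logic can ignore where a record came from.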

Centralizing detection and response reduces risk, speeds time-to-containment, and gives security teams confidence that no AI usage is falling through the cracks.

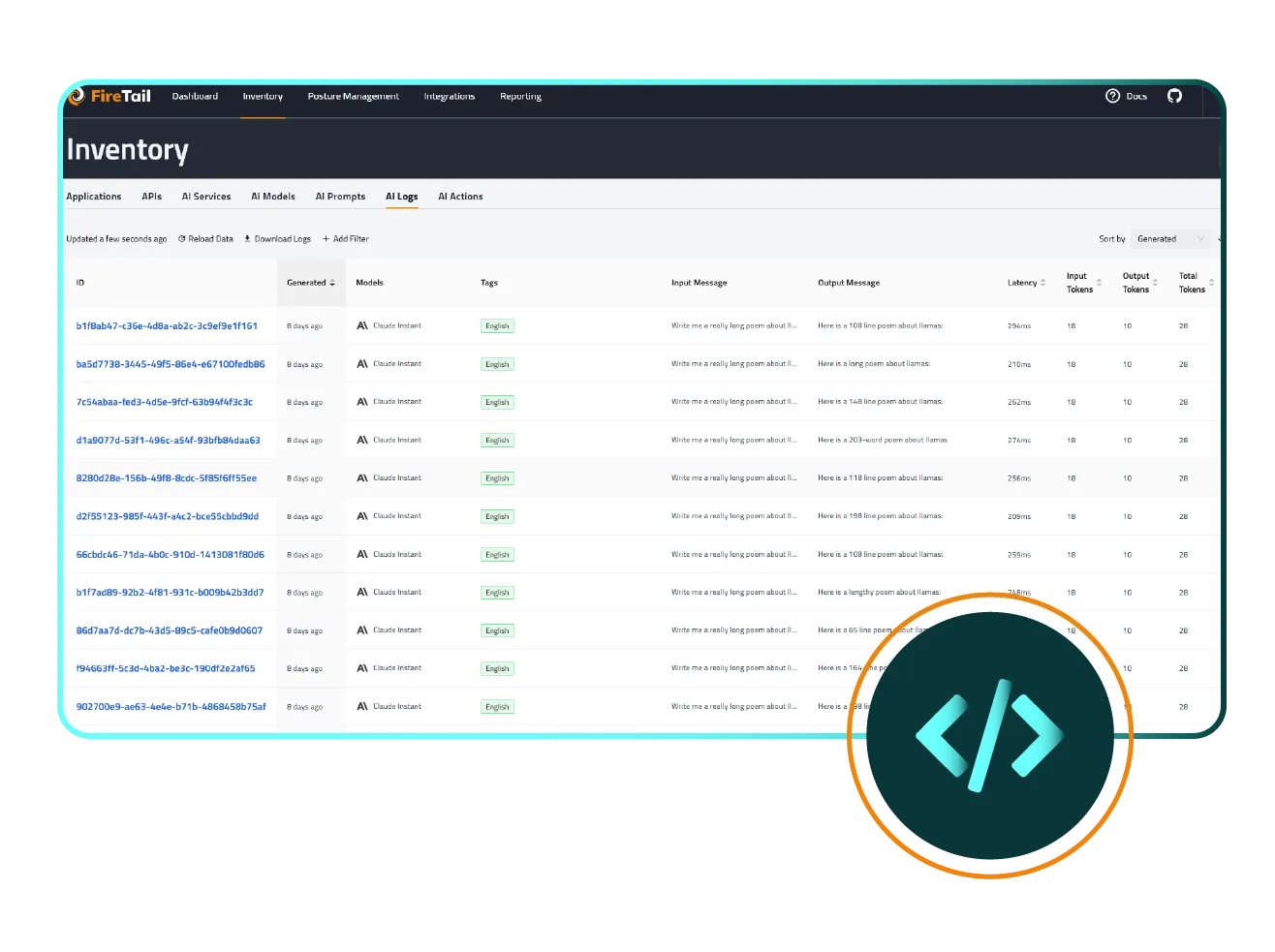

FireTail is designed to get your AI security program up and running fast. Thanks to our unified logging architecture, you can integrate multiple LLM providers and begin capturing meaningful data in minutes.


Our experts then work with you to tailor detection rules and guardrails to your specific use cases, so you get actionable insights right away. As your AI footprint grows, we help you adjust policies and refine alerts, turning every incident into an opportunity to strengthen your defenses.

Find answers to common questions about protecting AI models, APIs, and data pipelines using FireTail’s AI Security solutions.

FireTail normalizes logs from all major large language model (LLM) and AI providers into a single schema, so you can apply one detection rule across your entire AI ecosystem. This unified approach eliminates the need to manage separate rules for each provider and enables consistent, cross‑platform threat detection.

The platform continuously monitors AI activity and looks for prompt injection, jailbreaking, misuse of AI tools, data leaks and other abnormal behaviors. When a policy violation is detected, it immediately alerts security teams so they can take action before the issue escalates.

Yes. Based on your configured detection rules and guardrails, FireTail can automatically block future use, revoke access for offending users or flag incidents for follow‑up. Automated enforcement helps contain threats quickly and ensures consistent policy compliance without manual intervention.

By centralizing logs and detection logic into a single platform, FireTail applies the same threat detection rules across all of your AI providers and models. This reduces risk by providing complete coverage and faster time‑to‑containment, giving security teams confidence that no AI usage is falling through the cracks.