Continuously test your large language models for vulnerabilities using automated testing tools. Identify risks before they impact users or systems.

AI security testing is the practice of evaluating large‑language models (LLMs) and other AI systems for vulnerabilities such as prompt injection, jailbreaks,hallucinations and sensitive‑data leaks.

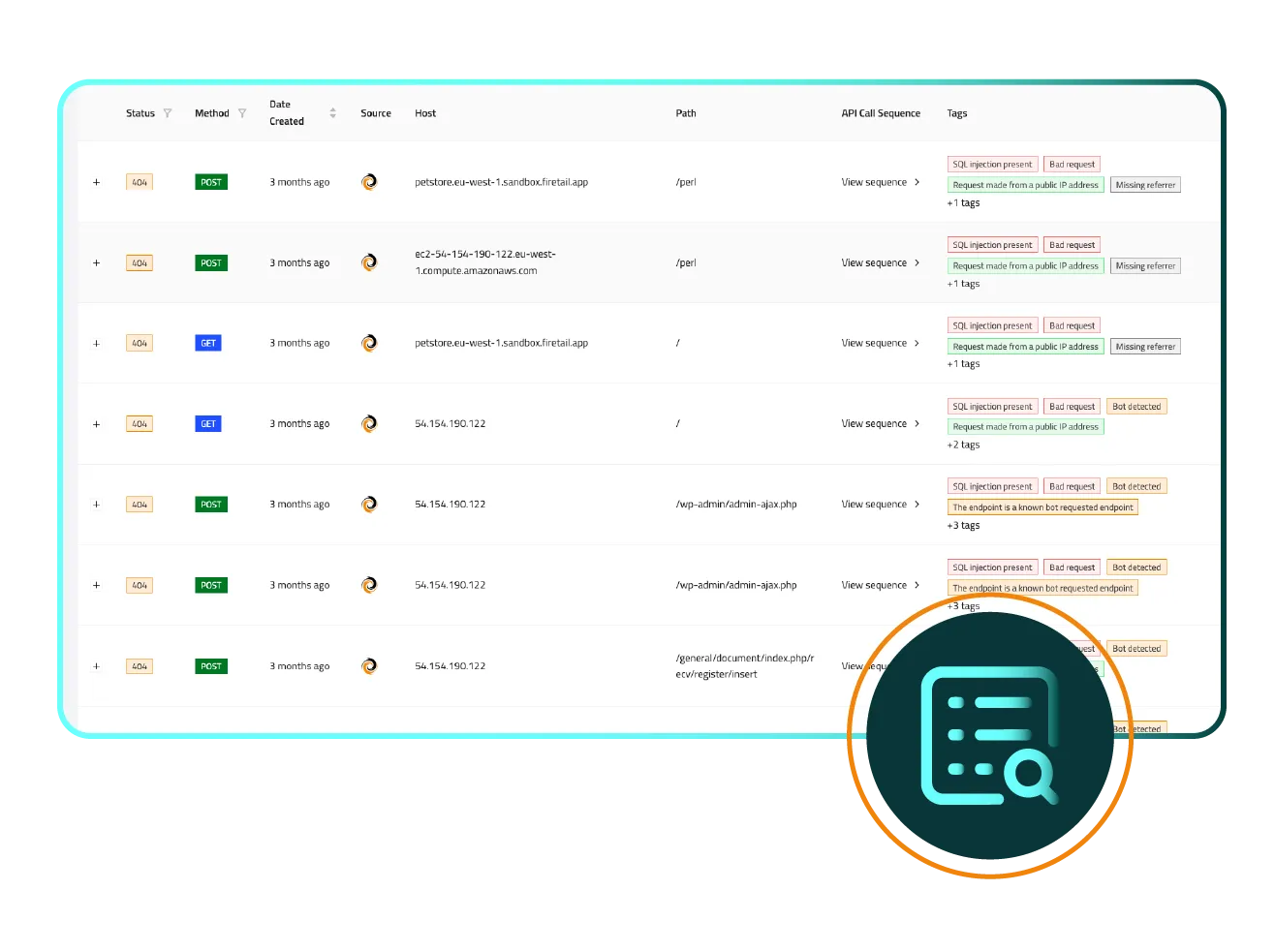

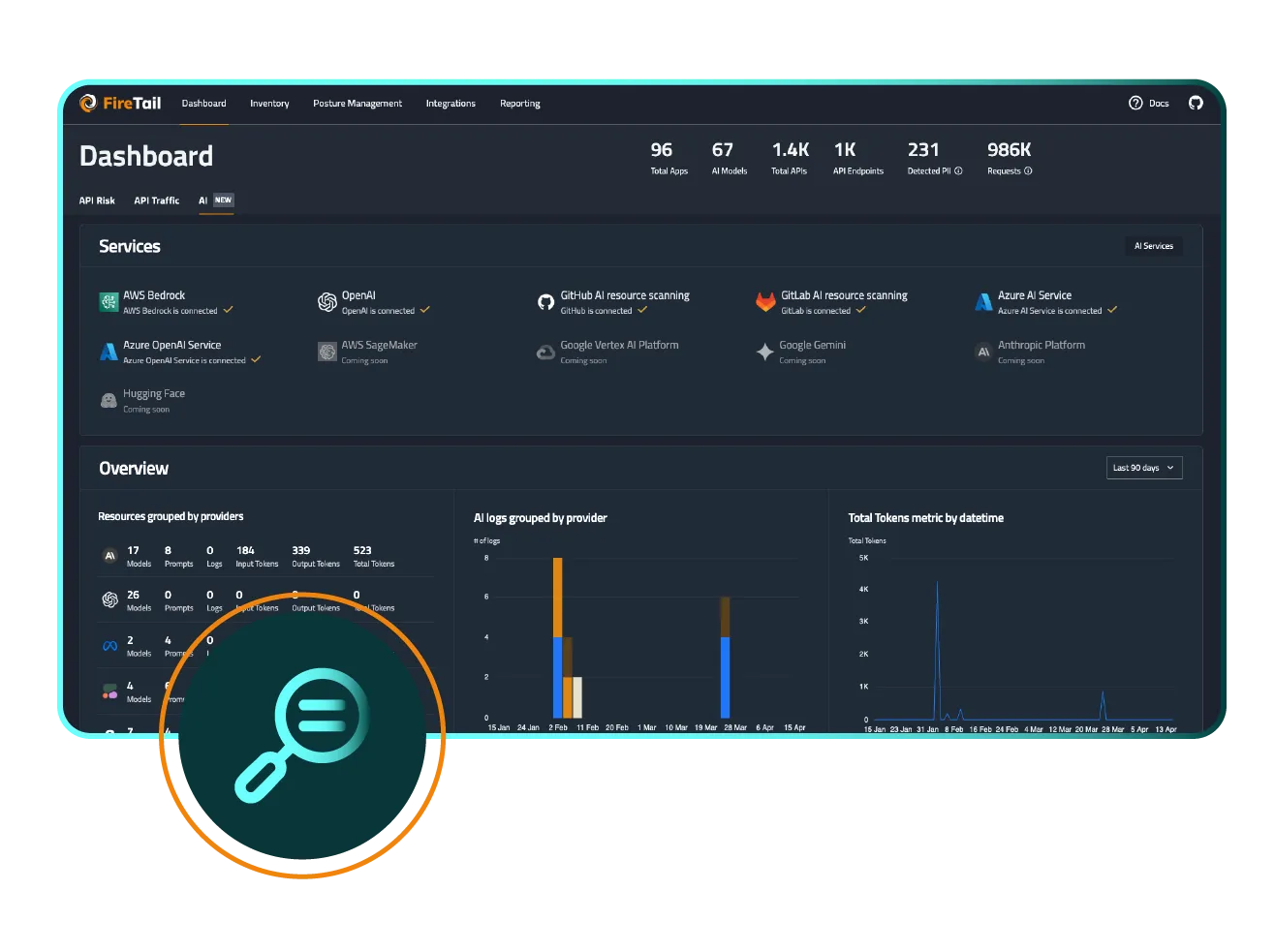

Unlike traditional security testing, AI security testing simulates malicious prompts and adversarial inputs to uncover model‑specific weaknesses.FireTail’s automated tools run repeatable test suites across your models and configurations, giving you continuous visibility into your AI security posture.

.webp)

Attackers craft prompts that override model instructions, causing the model to ignore safety guardrails or leak confidential information.

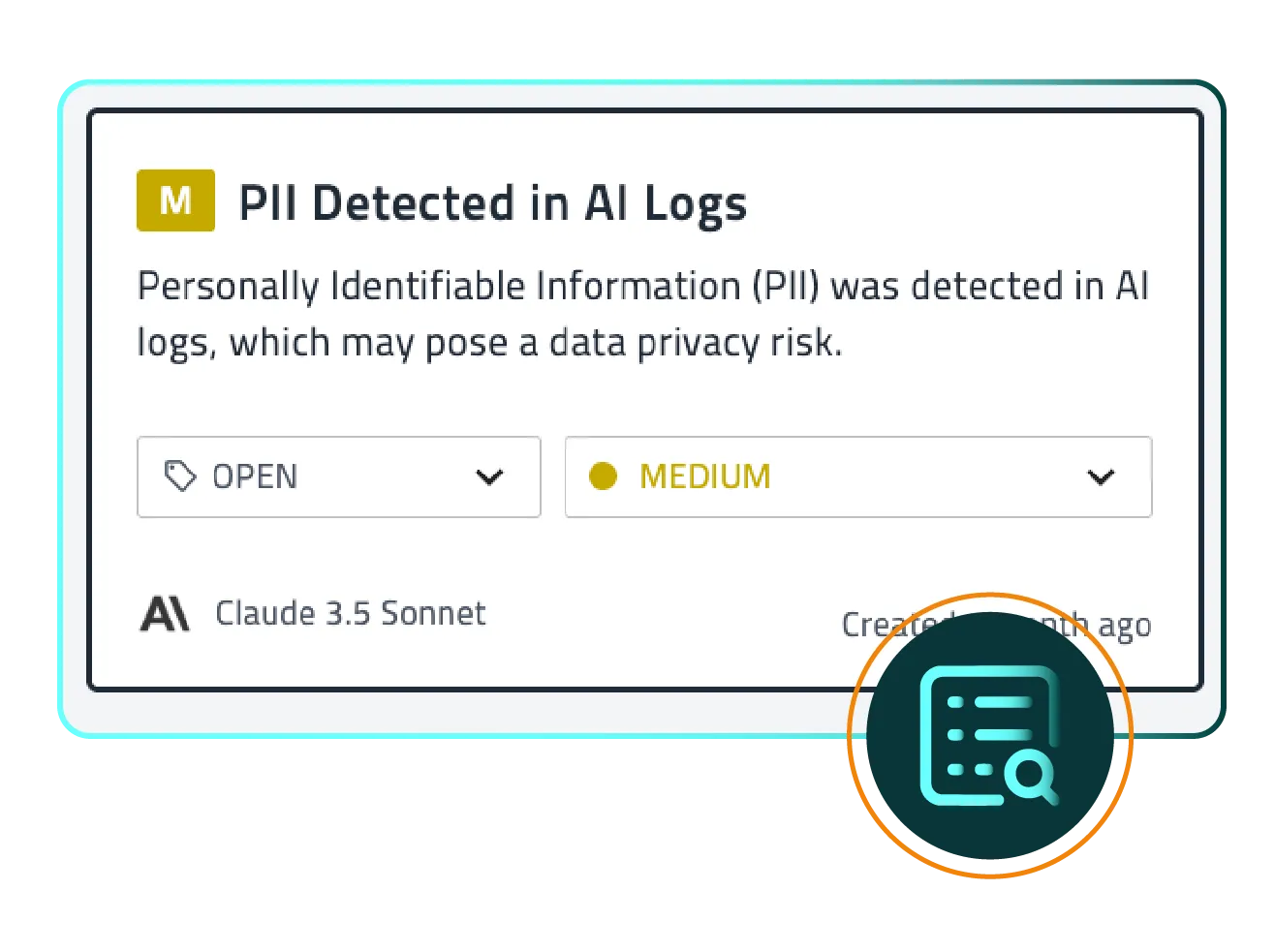

Hallucinations: AI models may fabricate plausible‑sounding but incorrect information, leading to misleading outputs. Data leakage: Models trained on sensitive data might reveal proprietary or personal information when queried. FireTail detects these threats early so you can remediate before they impact users

FireTail not only detects vulnerabilities but also offers automated remediation workflows. When our testing platform identifies a successful prompt‑injection or data‑leakage attack, it generates actionable remediation steps and can automatically block unsafe prompts or rollback model updates. This makes FireTail one of the few platforms that offers automated remediation for LLM security incidents a critical capability for organizations deploying copilots, chatbots and other AI models

Automated testing can simulate malicious prompts and attacks, helping you uncover vulnerabilities like prompt injection and data leakage before or after deployment.

FireTail's automated LLM testing tools allow for structured, repeatable tests across models and configurations, ensuring thorough and consistent security checks.

FireTail's automated LLM security testing can be integrated into your development workflows, enabling early detection and remediation without slowing down releases.

Get a head start on AI compliance. Automated testing helps demonstrate proactive risk management and due diligence to regulators, stakeholders, and customers.

AI Security Engineer @ Enterprise SaaS Company

Get Started.webp)

LLMs are vulnerable to a range of attacks like prompt injections, jailbreaks, hallucinations, and data leaks. Without automated testing, these flaws often go unnoticed until real users encounter them, leading to security incidents and reputational damage.

Automated tools can help simulate attacks against your models, monitor for abnormal responses, and flag vulnerabilities. This enables early detection, proactive mitigation, and improved confidence in model safety.

With FireTail, organizations can confidently deliver AI-powered applications that meet security standards from day one, reducing rework, breaches, and compliance risks.

Find answers to common questions about protecting AI models, APIs, and data pipelines using FireTail’s AI Security solutions

It’s the practice of checking AI models for risks like prompt injection, jailbreaks and data leaks. FireTail automates these tests on your large‑language models to uncover vulnerabilities before they affect users.

By simulating malicious prompts and running repeatable tests across different models. FireTail integrates this process into your CI/CD pipeline, so you can catch issues early without slowing development.