A View from the C-suite: Aligning AI security to the NIST RMF

The NIST AI Risk Management Framework builds on the foundation laid by the Cybersecurity Framework and adapts it to the new risks introduced by AI.

In 2025, the AI race is surging ahead and the pressure to innovate is intense. For years, the NIST Cybersecurity Framework (CSF) has been our trusted guide for managing risk. It was originally organized around five core functions: identify, protect, detect, respond, and recover (CSF 2.0 has since added a sixth, govern).

But with the rise of AI revolutionizing cybersecurity as we know it, the CSF is no longer enough.

The AI security landscape

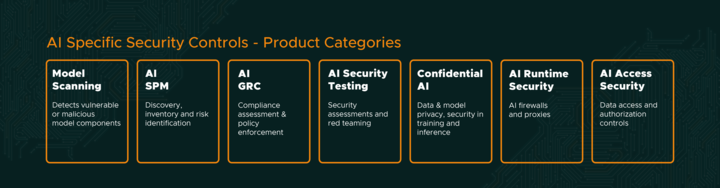

In 2025, AI has introduced a number of new risks, and new security capabilities have emerged to help organizations address them.

Many see these risks and skip to “AI Runtime Security.” An inline, runtime tool can be very good at its specific job, but it leaves us dangerously exposed in almost every other area.

Relying on an inline approach alone leaves gaps, because it does not fully address the five CSF functions: identify, protect, detect, respond, and recover. Let’s examine the shortcomings of this strategy through the lens of the CSF:

- Identify

A runtime tool can only identify what it sees. Risk becomes visible only at the interaction points where runtime security has been inserted. With high rates of shadow AI, this may not be a sufficient approach.

- Protect

This is where a runtime strategy shines: inline, runtime security can block threats using a variety of approaches. This is a win, but only for the interaction points where it is implemented and configured properly.

- Detect

Runtime strategies can be effective here, as they may have observability data. However, this again comes down to the question of install base. Other detection approaches that aggregate observability data from various sources will likely be more effective in large, complex organizations. This is especially true with AI, where experimentation is the norm and new AI use cases and environments are launched weekly.

- Respond

Responding to issues is mostly an organizational and process question, and both runtime and post-runtime approaches can be effective.

- Recover

Recovery is arguably the fuzziest and least well-defined of the categories. It will depend largely on the objectives of the organization. Again, both runtime and post-runtime strategies can be effective here.

The AI RMF

With the unique risks introduced by AI, including model theft, data poisoning, and emergent adversarial attacks, a more specialized approach is required. This is where the NIST AI Risk Management Framework (AI RMF) comes in. The RMF is not a replacement for the CSF. It builds on the foundation the CSF established and adapts it to a landscape that has been transformed by the rise of AI.

Unlike the CSF, the RMF has four core functions:

- Map

- Measure

- Manage

- Govern

The RMF takes into account the AI-specific challenges that are growing every day. While the CSF provided a great foundation, the RMF is much more applicable to the kinds of attacks we are seeing in 2025.

So how can we apply the knowledge from the RMF to our own cybersecurity postures?

Map

Security teams should do a thorough sweep to recognize context and the associated risks. Without adequate mapping infrastructure, shadow AI and other vulnerabilities thrive. After all, if you can’t see it, you can’t secure it.

Actionable steps: To get the full visibility needed, the first investment should be in AI Security Posture Management (SPM). Organizations need a centralized platform that can automatically discover and inventory their entire AI ecosystem to stay on top of threats.
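For teams that want to picture what such an inventory tracks, here is a minimal sketch in Python. It is not how any particular SPM product works: the `AIAsset` fields, the `shadow_ai` rule (unapproved or unowned), and the sample entries are all hypothetical, chosen only to show how an inventory exposes both shadow AI and the share of assets an inline runtime tool actually sees.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIAsset:
    """One discovered AI interaction point (all fields are hypothetical)."""
    name: str
    owner: str             # responsible team, "" if nobody has claimed it
    approved: bool         # has it been through security review?
    runtime_guarded: bool  # is an inline runtime control deployed in front of it?


def shadow_ai(assets):
    """Assets in use that were never approved or have no known owner."""
    return [a for a in assets if not a.approved or not a.owner]


def runtime_coverage(assets):
    """Fraction of inventoried assets that an inline runtime tool can see."""
    if not assets:
        return 0.0
    return sum(a.runtime_guarded for a in assets) / len(assets)


# Made-up inventory for illustration only.
inventory = [
    AIAsset("support-chatbot", "cx-team", approved=True, runtime_guarded=True),
    AIAsset("code-assistant-plugin", "", approved=False, runtime_guarded=False),
    AIAsset("sales-forecast-model", "data-science", approved=True, runtime_guarded=False),
    AIAsset("marketing-llm-trial", "marketing", approved=False, runtime_guarded=False),
]

print("shadow AI:", [a.name for a in shadow_ai(inventory)])
print(f"runtime coverage: {runtime_coverage(inventory):.0%}")
```

Even in this toy inventory, the runtime control covers only one of four assets, which is the visibility gap that mapping is meant to close.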

Measure

After identifying risks, security teams should measure their scope by assessing, analyzing, and tracking them, so that they understand each risk and know how to respond.

Many organizations skip this step and focus on runtime defense, creating a "brittle shield." This approach only addresses the attack attempt, not the underlying vulnerability that made it possible. An inline or proxy approach alone is therefore not enough.

Actionable steps: Invest in proactive testing. Scan models for vulnerable or malicious components in the AI supply chain before they hit production. Embrace continuous AI Security Testing and red teaming to identify weaknesses before adversaries do.
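As one concrete illustration of pre-production scanning, the sketch below inspects a Python pickle file without loading it. It assumes the model artifact is pickle-serialized, which is only one of many formats, and the `RISKY_OPCODES` set and `risky_opcodes` helper are illustrative choices rather than a complete scanner. It relies on the standard library's `pickletools.genops`, which walks the opcode stream without executing it.

```python
import pickle
import pickletools

# Opcodes that let a pickle import or call arbitrary objects when it is loaded.
RISKY_OPCODES = {"GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX"}


def risky_opcodes(data: bytes):
    """Return the sorted names of risky opcodes found, without unpickling."""
    found = set()
    for opcode, _arg, _pos in pickletools.genops(data):
        if opcode.name in RISKY_OPCODES:
            found.add(opcode.name)
    return sorted(found)


class Payload:
    """Stand-in for a tampered artifact: runs a callable when unpickled."""
    def __reduce__(self):
        return (print, ("code ran during unpickling",))


plain_weights = pickle.dumps({"weights": [0.1, 0.2, 0.3]})
tampered = pickle.dumps(Payload())  # serializing does not run the payload

print("plain:", risky_opcodes(plain_weights))
print("tampered:", risky_opcodes(tampered))
```

Many legitimate model files also use these opcodes, so a practical scanner would go further and compare the imported names against an allowlist rather than flag every occurrence.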

Manage

Teams must evaluate each risk's projected impact, prioritize accordingly, and act on the most important risks first, repeating the process continually to stay on top of security.

Actionable steps: Build a layered, multi-step defense. A single line of defense is a single point of failure. An adequate defense should include: AI Runtime Security, AI Access Security, and Confidential AI. The three should work together to create a holistic approach to AI security.
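The prioritization step in this section can be sketched as a simple risk register. This is a minimal example, assuming a likelihood-times-impact score on 1 to 5 scales, which is a common convention and not something the RMF prescribes. The `Risk` class, the `prioritize` helper, and every number below are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain), hypothetical scale
    impact: int      # 1 (minor) to 5 (severe), hypothetical scale

    @property
    def score(self) -> int:
        """Projected impact weighted by how likely the risk is."""
        return self.likelihood * self.impact


def prioritize(risks):
    """Order risks from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Illustrative values only; real scores come from the Measure step.
register = [
    Risk("data poisoning", likelihood=2, impact=5),
    Risk("model theft", likelihood=2, impact=4),
    Risk("prompt injection", likelihood=4, impact=4),
    Risk("shadow AI usage", likelihood=5, impact=3),
]

for risk in prioritize(register):
    print(f"{risk.score:>2}  {risk.name}")
```

Re-running the ranking whenever the measurements change keeps the order of work aligned with current exposure rather than with last quarter's assumptions.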

Govern

This is often the most overlooked area. An organization might have a runtime tool but no incident response playbook for an AI-specific breach, leaving them with no safety net at all when a serious incident occurs.

Actionable steps: Governance should be integrated and automated. An AI GRC platform can help define security policies and enforce them automatically across the entire AI lifecycle, ensuring that security is embedded from the start and not an afterthought.

Overall, NIST has developed two frameworks that help manage cybersecurity risk, and both can be helpful tools, but the more recent AI RMF is especially relevant in today’s risk-filled environment. FireTail is incorporating the steps outlined in the RMF into our platform.

FireTail is equipped with a centralized inventory and dashboard where you can map all your AI interactions in one place. It has detection and response capabilities that allow you to assess risks and respond in real time.

To learn more, sign up for a free trial or schedule a demo today!

Other resources:

The OWASP Top 10 Risks for LLMs is another great place to read about the types of risks organizations face with their AI.

Check out our guide, Beyond the Basics: A C-Suite Guide to Prompt Injection Attacks (https://www.firetail.ai/blog/c-suite-guide-prompt-injection), which tackles risk #1 on the OWASP Top 10 list.

Explore the principles of strong AI governance here: Building a Culture of Trust: The Keys to Effective AI Governance (https://www.firetail.ai/blog/keys-to-ai-governance)