OpenAI Used Globally for Attacks

AI is continuing to evolve, for better and for worse. In this blog post, we’ll explore the research around some ChatGPT vulnerabilities and how the tool can be manipulated for malware, hacking, and more.

In 2025, virtually no one is a stranger to OpenAI’s ChatGPT. It is one of the most popular AI applications on the Internet, and almost everyone is using it, from your boss to your neighbor to the passive-aggressive friend sending you oddly phrased text messages.

But because it is relatively new, researchers keep finding new vulnerabilities in ChatGPT, including ways it can be exploited by bad actors.

Social Engineering

On the social engineering side, bad actors figured out how to automate resume generation, simulate live interviews, and configure remote access for deceptive employment schemes.

They were also able to craft personas, translate outreach for espionage, and mimic journalists and think-tank analysts in order to extract information from targets.

Malware and Hacking

Bad actors could use ChatGPT to help build malware, for example by troubleshooting Go-based implants, evading Windows Defender, and configuring stealthy command-and-control (C2) infrastructure.

They could also use ChatGPT for automated recon, penetration testing scripts, C2 configurations, and social media botnet management. Below are a few examples of how bad actors have been using ChatGPT to their benefit.

Propaganda/Influence

Bad actors could use ChatGPT to generate propaganda for platforms like TikTok, X, Telegram, and more, posted through fake personas and often boosted with fake engagement. The themes of the propaganda ranged from geopolitical agendas to US polarization and election interference in Germany.

Scams

Scammers also used ChatGPT to write scam messages that lured victims into fake jobs with false promises of high pay, jobs that turned out to be a way to extort them.

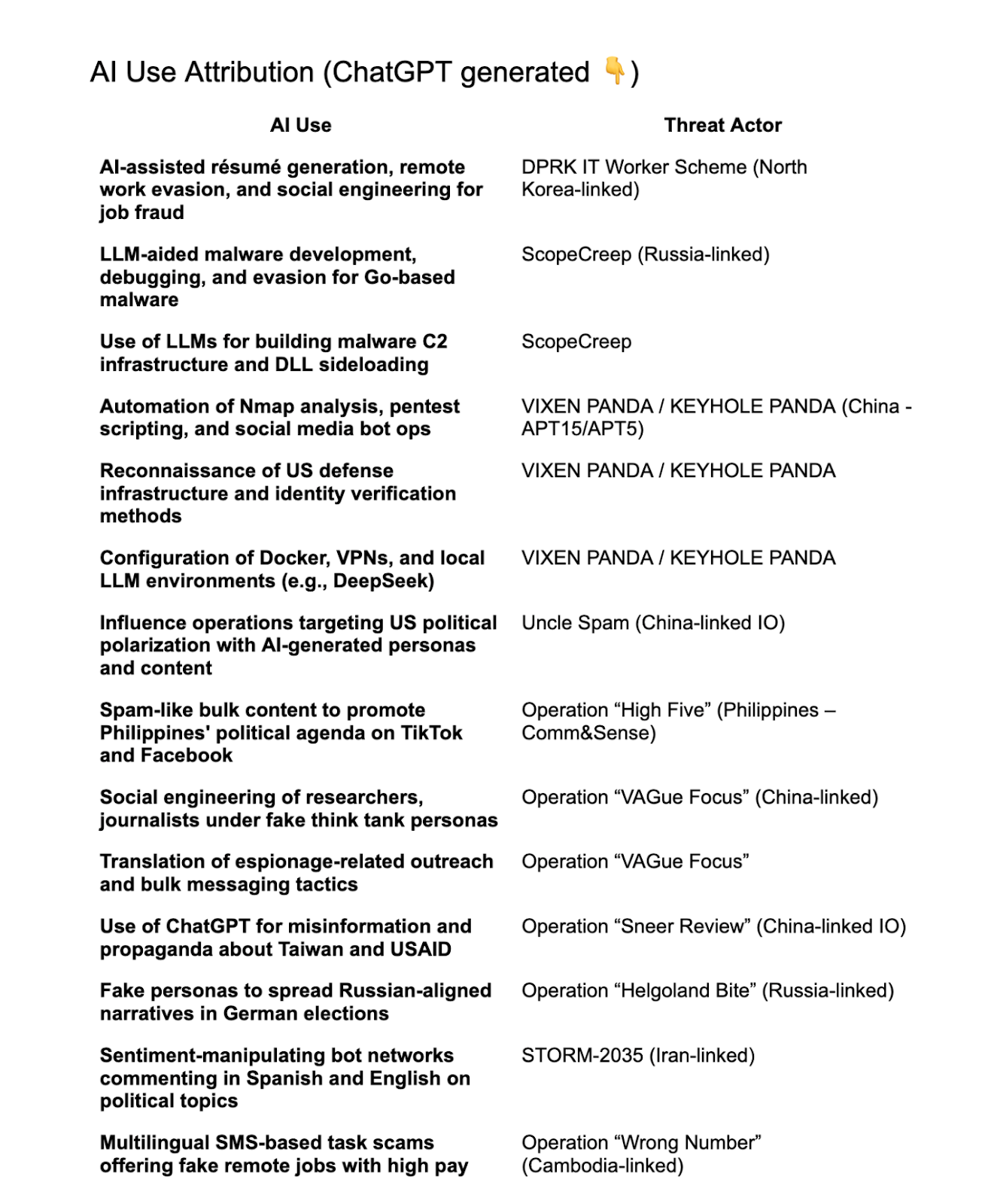

AI Use Attribution

The following table shows a breakdown of the bad actors who were using ChatGPT for different malicious purposes. Some of the activity came from known adversaries (Russia, China, Iran, and North Korea), while other activity came from Cambodia and the Philippines.

Takeaways

Unfortunately, this is likely only the beginning of bad actors using ChatGPT for their purposes. As AI continues to advance, we can only expect hackers to continue to find new ways to exploit it. And with AI security still being relatively new, staying on top of these rising attacks is increasingly difficult.

To see how FireTail can help with your own AI Security, schedule a demo or start a free trial today.
